Photography, painters and neuroscience

From Snapshots to Picasso — How photographers can learn from painters and neuroscience

Documentarians strive to create a transparent window on reality. Artists take you on a guided tour of their own reality.

About Mark Heyer — see below

On a recent trip, I began to explore a new artistic style for creating photographs. Many people commented that my photos looked more like paintings. This made me wonder, how could people mistake my photographs for paintings? What is different about these photographs?

The next question I asked myself was: why are paintings deemed more attractive and valuable than photographs? Why do people prefer to hang paintings on their walls, even cheap copies, rather than photographs?

Answering that question drew on my knowledge of information, my recent study of neuroscience and a subsequent study of famous painters. This article explores the link between art and human perception, which is fundamental to understanding all visual art and points to a new future for photography.

The common thread of all great visual artists is that they intuitively understand the neurobiological processes of human vision.

Painters are human themselves, so it is hardly surprising that the great ones, from cave dwellers on, created images that appeal to the underlying biology of human vision.

Neurobiology — The human eye is not a camera

The eye sees more than the camera can record — the mind imagines more than the eye can see

Our vision is not simply an image projected by a lens onto some part of our brain. Vision is a very complex modular system, centered on the visual cortex, made up of neuronal sensors, filters, recognizers, pleasure centers, feedback, action stimulators and memory.

The brain has at least 20 specialized modules (parts of the visual cortex) just for handling vision. There are six different modules dedicated to face recognition alone. Incoming signals are modified in real time by feedback from our brain about what we “expect” to see. We humans build models of our visual and physical world and test our incoming visuals against them. Neuroscientists describe two distinct pathways for our vision processing. The ventral, or lower, pathway is known as the “what” pathway. This path analyzes images for shape, color, etc. The dorsal, or upper, pathway is known as the “where” pathway, which analyzes motion and builds our model of the environment.

How do we see?

The most basic elements of vision are line, shape, angle and color.

Incoming images are routed to a part of the visual cortex that contains neural networks acting as “Gabor filters.” Technically, a Gabor filter is a sinusoidal wave modulated by a two-dimensional Gaussian envelope, tuned to a particular orientation and spatial frequency. Put simply, these filters detect edges, angles, shapes and patterns. These patterns are then sent onward to different modules dealing with shape, face and eye recognition, among others. Unlike a camera, the eye responds to the contrast in a scene, not the absolute brightness of its elements.
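To make the idea of an orientation-tuned edge detector concrete, here is a minimal Python sketch of a Gabor-style kernel, assuming NumPy is available. The function names, kernel size, wavelength and sigma are illustrative choices, not values taken from neuroscience or from this article, and the sketch is a textbook image-processing filter rather than a model of actual neurons. It uses an odd (sine) phase so that it responds to a step edge. Because the kernel sums to zero, it reacts to the brightness difference across an edge and ignores a uniform change in overall brightness.

```python
import numpy as np

def gabor_kernel(size=21, wavelength=8.0, theta=0.0, sigma=4.0):
    """Odd-phase Gabor kernel: a sine wave under a 2-D Gaussian envelope.

    All parameter defaults are hypothetical, chosen only for illustration.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates so the sine wave varies along direction theta.
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r**2 + y_r**2) / (2.0 * sigma**2))
    carrier = np.sin(2.0 * np.pi * x_r / wavelength)
    kernel = envelope * carrier
    # The odd-phase kernel is already nearly zero-mean; this removes rounding residue.
    return kernel - kernel.mean()

def response(patch, kernel):
    """Magnitude of the filter response for a patch the same size as the kernel."""
    return abs(float(np.sum(patch * kernel)))

kernel = gabor_kernel(theta=0.0)
half = kernel.shape[0] // 2
_, xx = np.mgrid[-half:half + 1, -half:half + 1]

vertical_edge = (xx > 0).astype(float)   # dark left half, bright right half
horizontal_edge = vertical_edge.T        # dark top half, bright bottom half

strong = response(vertical_edge, kernel)    # edge matches the kernel's orientation
weak = response(horizontal_edge, kernel)    # edge is perpendicular to it

# Same brightness step across the edge, different overall brightness.
dim = response(50 + 100 * vertical_edge, kernel)
bright = response(150 + 100 * vertical_edge, kernel)
```

With `theta=0.0` the kernel responds strongly to the vertical edge and essentially not at all to the horizontal one. The `dim` and `bright` responses match, because adding the same amount of light everywhere leaves the result unchanged.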

In the grainy photograph, any human being would know that these are faces. Even with minimal information, faces can be recognized easily. This picture is similar to what your brain’s shape recognizing Gabor filters would see if you were looking at a detailed, full tonal range version of the same photo. However, your brain can recognize the grainy version even faster because it has high contrast and less detail to process.

Unnecessary detail is expensive for the brain to filter out and takes more time. Neurobiology research indeed shows that facial recognition is actually better in the slightly out of focus peripheral visual field than when you are looking directly at a person.

The images formed on the rods and cones of the retina are dispatched to a multitude of visual cortex modules. Along the way, the stream of nerve impulses is simplified, compressed and reduced at each stage, eliminating unnecessary information. The visual system has evolved to gather the most information at the lowest possible energy cost.

In line with this efficiency theme, when the brain recognizes something as familiar, it ignores further information coming from the eyes. We have all had the experience of being in a place with some regular background noise. In time, we don’t even notice it any more. Vision works the same way. Images that are different, unusual or unknown attract the most attention.

Color perception

Color perception is handled by an entirely separate part of the brain, as different from the Gabor filters as hearing is from smell. Most people have three types of “color” cone cells in the retinas of their eyes. To simplify somewhat, these sense the colors red, green and blue. The human eye can distinguish on the order of a million colors; this range is known as its color gamut.

The color processing modules of the brain are separate and very different from the shape recognizers.

In fact, among mammals, only humans and some primates have well developed three color vision. Clearly, color was important to our long term evolution. Some insects and birds (like pigeons) have five color vision, allowing them to see 100 times more colors than normal humans. At the other extreme, marine mammals can only see one color.

The first color processing happens in the retina itself. Information is sent along the optic nerve to the optic chiasm, where the nerve fibers from the inner (nasal) half of each retina cross to the opposite side of the brain. Then on to the lateral geniculate nucleus of the thalamus. From there, the signals progress to the primary visual cortex, known as V1, where complex processing takes place. Then on to V2, V4 and finally to the inferior temporal cortex, where the color information is merged with shape information to tell our conscious brain what we think we see. There’s a whole lot of processing going on, in the blink of an eye.

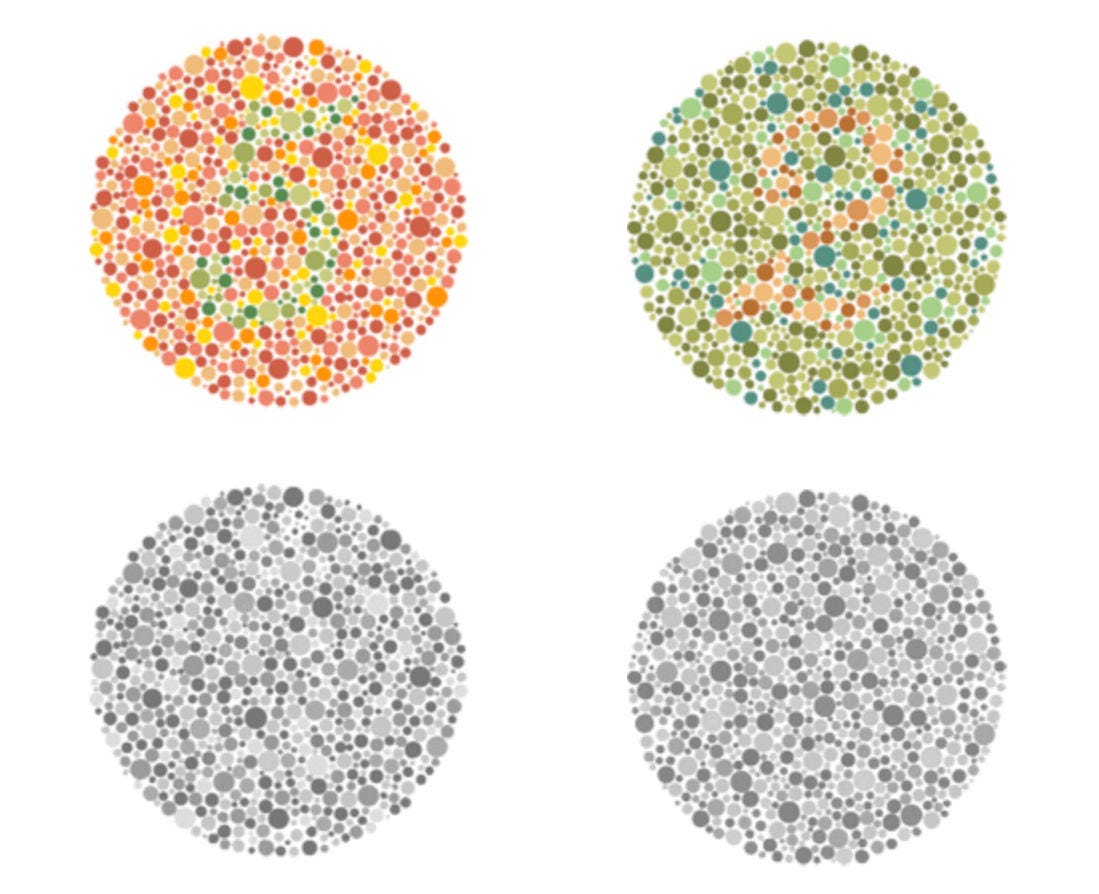

Our color perception can distinguish different colors even if both reflect the same amount of light — have the same luminance value. However, the black and white shape recognizers see the two luminance values of the colors as the same, as in this illustration.
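As a rough numerical illustration of two different colors sharing one luminance value, the sketch below uses the standard Rec. 709 luminance weights on linear-light RGB values in the range 0 to 1. This is a display-engineering formula, used here only as a stand-in for what a black and white channel would register; it is not a model of the brain, and the function and variable names are illustrative.

```python
# Rec. 709 weights for relative luminance from linear-light R, G, B.
REC709 = (0.2126, 0.7152, 0.0722)

def relative_luminance(rgb):
    """Weighted sum of linear-light RGB components (each in 0..1)."""
    r, g, b = rgb
    return REC709[0] * r + REC709[1] * g + REC709[2] * b

# A pure red, and a green dimmed until it carries the same luminance.
red = (1.0, 0.0, 0.0)
green = (0.0, REC709[0] / REC709[1], 0.0)

y_red = relative_luminance(red)
y_green = relative_luminance(green)
```

The two colors are obviously different hues, yet `y_red` and `y_green` agree, so a grayscale conversion based on this formula would render them as the same gray.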

It is important to note that color perception varies greatly among individuals. Some are “color blind” and cannot see certain colors at all, or see reversed colors.

After line, shape and color recognition come the face, eye, and object recognition modules. These are important for high level recognition and very well developed for specific vision tasks.

Line and shape recognition is key to the neurobiology of human vision. To paraphrase Picasso, color is a “nice to have” feature of paintings while shape and line are essential.

How our eyes see the world

A camera records a scene in a single momentary exposure. For humans, seeing a scene requires multiple eye “exposures.” This is necessary because the angle of view for in-focus human vision is only about 1 to 10 degrees.

Our eyes build up an image by physically scanning across a scene, stopping at “points of interest” in a series of fixed gazes known as fixations, which are linked by rapid jumps called saccades. During each saccade from one fixed gaze to the next, our brain suppresses our conscious vision. This stepping ability is what makes it possible for you to read text. At each stationary step, the eye adjusts both its iris and the chemistry of the retina to optimize the exposure. This allows us to see details in both deep shadows and bright sun within the same scene.

The “where” channel of vision provides us with motion perception and helps build our model of the environment around us. This channel is important for mapping the place we are in, walking, driving, dancing, sports and all the things we do that involve places and moving.

The ability to predict a composition and click the shutter at the “critical moment” is essential for photography. It requires building a moving perceptual model of the scene and where the subject and photographer will be when the picture is to be taken. Motion of the subject and rapid positioning of the artist is less important to the more contemplative practice of painting.

Painting

Painters and other artists have instinctively exploited the neurological characteristics of human vision from the beginning of time. Let’s look at how they do it.

From cave paintings to Rembrandt, Monet, Van Gogh, Picasso and Keane, we can see a progression in the efforts to more directly address, and challenge, the discrete human visual modules as the artistic route to the deeper mind of emotion.

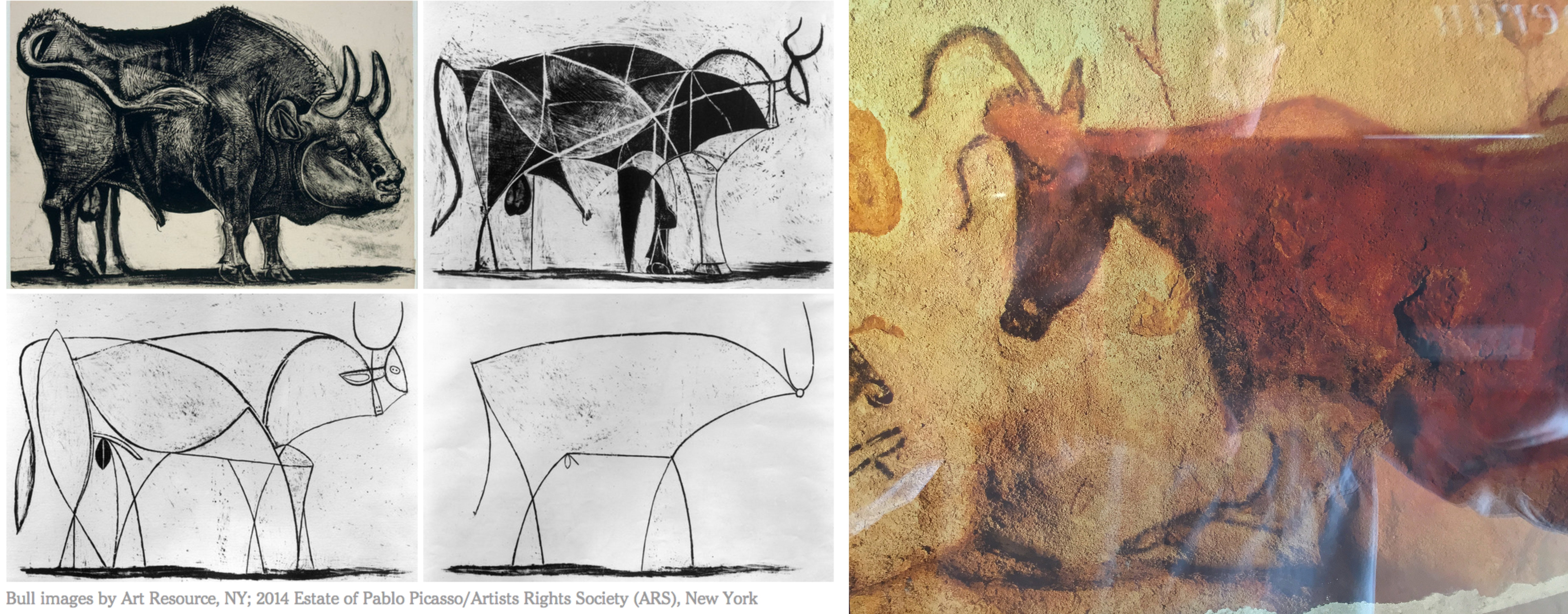

The earliest cave painters mastered line, shape and color to create simple but compelling images that still have meaning for us today.

As a painter, Rembrandt was especially known for rendering realistic and visually pleasing faces.

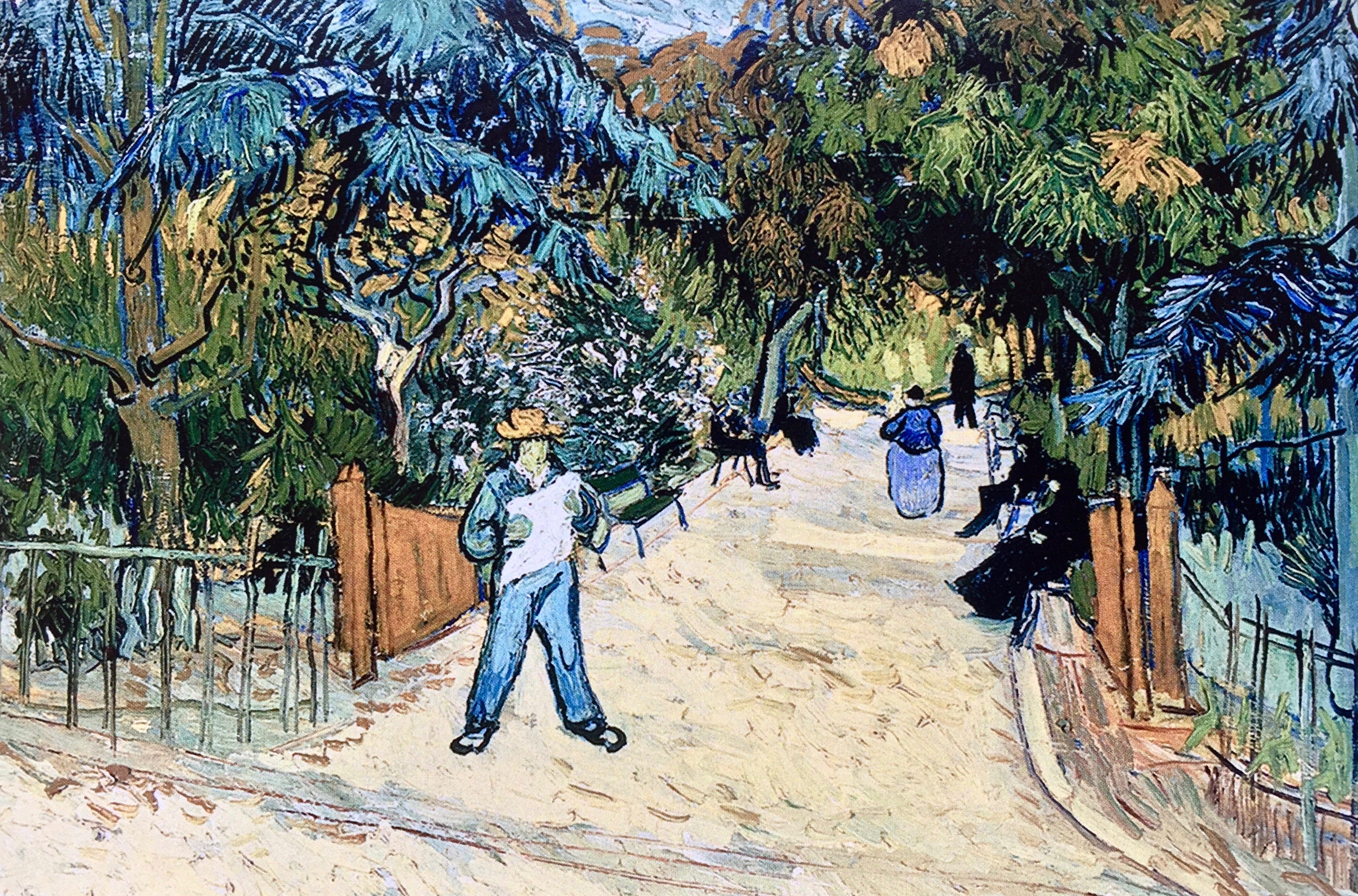

Monet pioneered the use of color to the exclusion of most line and shape, creating apparent detail from small dabs of paint. Van Gogh, who suffered from mental illness, is cherished for getting his intellectual mind out of the way and opening a spigot from his raw perceptual mind, letting line, shape and color gush out unpredictably onto the canvas. It’s interesting to note that both of these timeless artists were reviled by the critics and art world of their day as being “unrealistic” and “garish.”

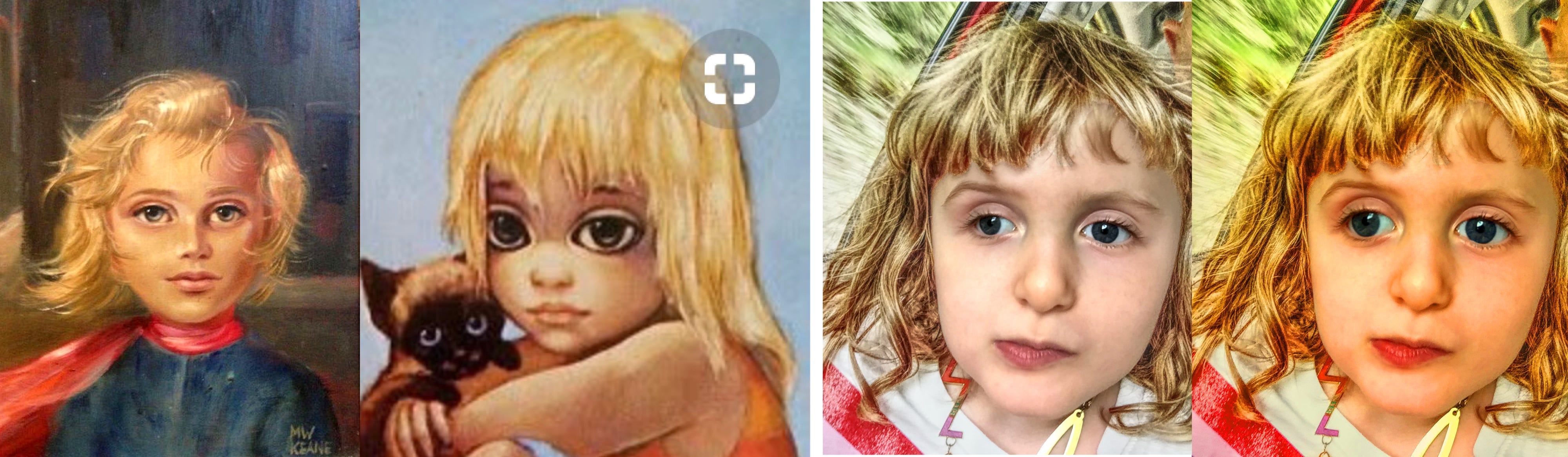

Picasso started as a realistic painter. He began deconstructing his images, first in color, during his Blue Period, then in Cubism, which explored the deep roots of how we recognize images. In the blue painting above and this lovely cat painting, he plays with our shape, color, face and eye modules. Our brain receives each of the elements separately and puts them together despite the evident unreality of the image.

In his exploration of the limits of human perception, Picasso ran the clock backwards with his famous series of bull drawings, bringing him all the way back to the first cave paintings, talking directly and only to the brain’s shape recognizing Gabor filters.

Although he could not have known about the neurobiology of the brain scientifically, Picasso had a very clear understanding of its elements and how to address them. Looked at from that perspective, one gains a whole new insight into his work.

Margaret Keane, an American painter of children with huge eyes, made a fortune during the 1960s and 1970s selling millions of copies of paintings that serious artists and critics deem “junk.” Yet, at 80+, her work continues to develop and sell. Why is her art so popular? Her life story is the subject of the movie Big Eyes.

The Keane paintings on the left below engage line and shape, face, eye and color recognition. Our brain knows that they are not “realistic” but puts the pieces together and accepts them anyway.

The Keane paintings show the importance of eyes to engage human emotion. The fact that the eyes are so exaggerated is precisely what attracts her fans. Subtle changes in a photograph can create the same emotional stimulation. In my photographs on the right, both faces are exactly the same size, but the second face looks bigger. Only the color saturation and size of the eyes have changed.
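A change in color saturation of the kind mentioned above can be sketched in a few lines of Python using the standard library `colorsys` module. This is only an illustration of the general idea under simple assumptions (RGB components in the range 0 to 1, saturation as defined by the HSV color model); it does not describe the tools or settings actually used for the photographs in this article, and the function name and sample color are hypothetical.

```python
import colorsys

def scale_saturation(rgb, factor):
    """Multiply the HSV saturation of one RGB color, leaving hue and value alone."""
    r, g, b = rgb
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    s = min(1.0, max(0.0, s * factor))  # keep saturation inside 0..1
    return colorsys.hsv_to_rgb(h, s, v)

muted = (0.8, 0.6, 0.5)                 # a hypothetical muted warm tone
vivid = scale_saturation(muted, 1.5)    # same hue and brightness, stronger color
gray = scale_saturation(muted, 0.0)     # all color removed
```

Applied to every pixel of an image, a factor above 1 intensifies the colors without changing the shapes or the brightest channel of each pixel, which is why the underlying line and shape information stays the same.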

Capturing an image vs creating an image

There is an obvious difference in the way photographs and paintings are produced. A photographer “takes” a photograph. At that instant it has all the detail and composition it will ever have.

Painters begin with a mental composition, infinitely flexible in all of its vectors — time, space, perspective, realism, etc. Detail is added piece by piece until the artist determines that it is sufficient, that the experiment is over, or just that they are bored.

Deconstructing the paintings of Van Gogh, for example, you can see how he simply outlined many of his compositional elements in crude black lines. In the first image above, Van Gogh includes a fence as simply a rough outline in black, not even bothering to fill it in. The main character has no face and people on the path are just little blobs of black paint. Our Gabor filters recognize the shapes and our brains fill in the detail from our own imagination.

In the house and fields painting, filled with strong colors, the bushes next to the house are suspiciously the same shade of blue as the house. Did he just have left over blue? Our brains accept blue bushes, extreme colors, distorted perspective and almost abstract shapes without question, and in fact find them attractive. Remember, the brain only pays close attention to things that are unusual or unknown. The very irregularity of Van Gogh’s work is what makes it so interesting that we can’t take our eyes off of it.

The photography revolution that is upon us

Since its inception in the 1830s, the main goal of photography has been to create the most transparent possible window on reality — to portray a scene with such fidelity that the viewer perceives it as they would the original scene.

In fact, the photographic community is historically split between those who favor strict depiction of the scene photographed (e.g. Ansel Adams, Imogen Cunningham, Edward Weston) and those who might interpret the subject by modification of the image.

Photographs are created by a photosensor and stored on a recording medium for later display. Every aspect of the system is designed to produce the most technically accurate reproduction of the scene — within the limits of the recording and display technology.

Cameras only record a limited tonal and color range at a particular focal length and exposure. Photographic composition and detail are fixed at the moment of exposure.

The massive adoption of smartphone/camera technology is creating a new era of computational photography. With modern software tools, we can easily manipulate the original photograph. Along with new display technology and processing software, our conception of what photography is and how it works is rapidly changing.

Armed with an understanding of the neurobiology of human visual perception, photographers can now address human perception artistically on the same level that painters have for millennia.

This leaves the question posed at the beginning — can photographs become paintings? The facile answer is no. The two media are different in conception and execution as we discussed. Simply putting a “paint brush” filter on a photograph does not make it art.

It would seem that painters, starting with a blank canvas and the unlimited human imagination, would have an insurmountable advantage over photographers for creating evocative works of art. Painters first construct the scene, real or imaginary, using mental imagery. The images they render are created by their own unique mental process of vision, guiding their hands, with pigments, brushes and tools, across a canvas. They take us on a personal guided tour of the subject.

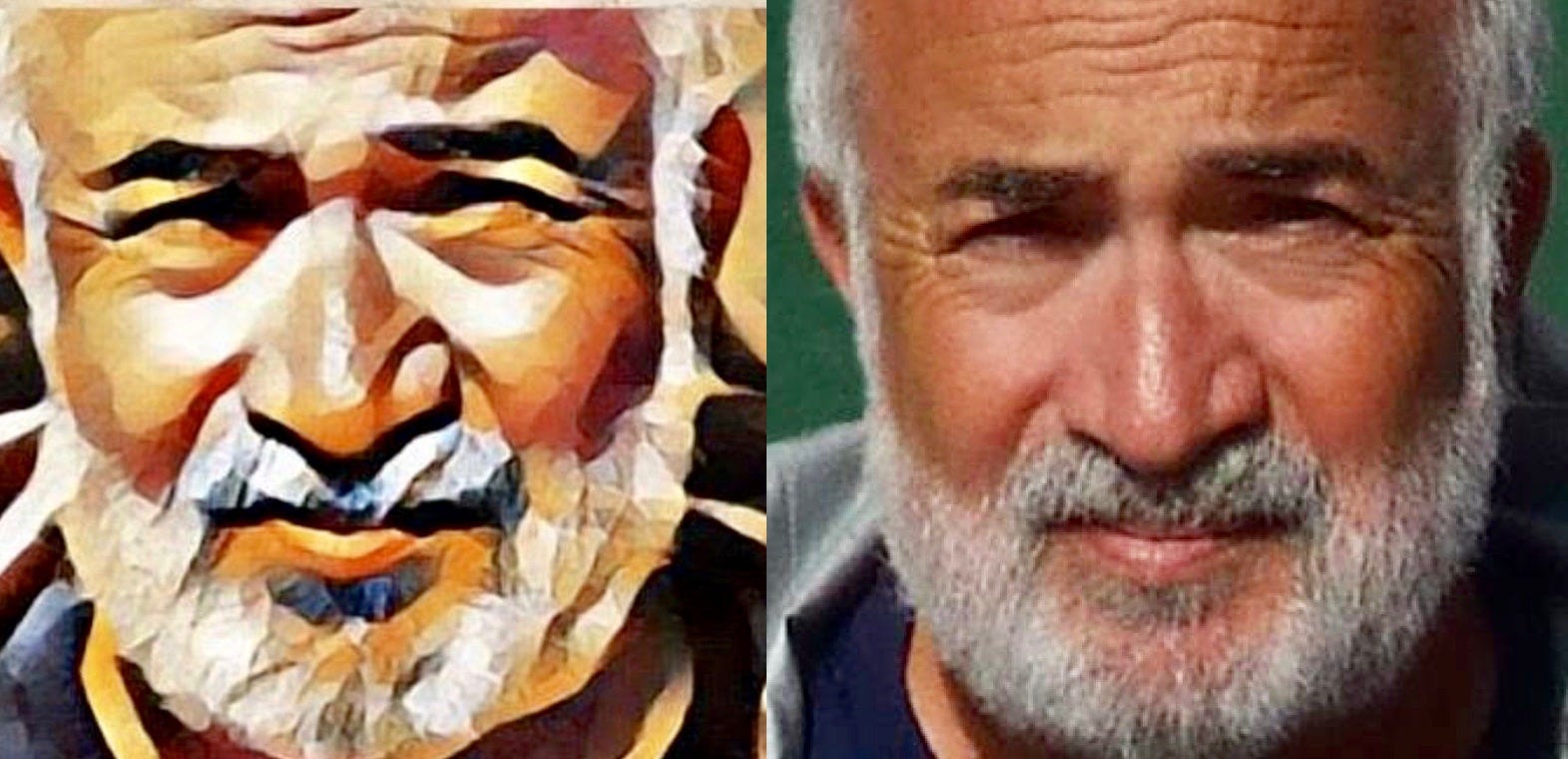

Which of these two images is more technically accurate? Which would you hang on your wall? Even though the painting lacks photographic detail, it is more attractive to the human eye — because it is a product of human interpretation which appeals directly to the brain’s image processing modules, not just a mechanical output from a two-dimensional image sensor.

Taking photography to the next level

My analysis suggests that knowing the principles of visual neurobiology can assist artists to create attractive images using any medium — based on scientific principles combined with their own imagination and insight.

For photographers, understanding and interpreting scenes as their own eye sees, not as the camera sees, is key to creating images that rise above mere documentation. Painters have been instinctively doing this for millennia.

The resulting works will be neither photo nor painting. They will combine the technical sophistication and detail only available in photos with specific artistic manipulations that make them more attractive to the neurophysics of the human brain.

This is the challenge — creating a new and vibrant medium of artistic expression, combining the technical capabilities of photography with the artistic sensibilities of painters — taking our viewers on a personal, interpretive tour through our human vision of the world.

About Mark Heyer

In 1956, at age eight, Mark Heyer built his own pinhole camera to shoot tabletop scenes of moon landings by his model rockets. At 18, he was admitted to Art Center College of Design’s prestigious photography program, established by Ansel Adams. He worked on the West Coast as a commercial photographer, on feature films and in television.

By 1980, his interest in combining photography with computers led him to become a pioneer of interactive media working for Sony Corporation in New York. He introduced the terms “grazing, browsing and hunting” to describe the three ways that humans gather information. He lectured widely over the years, including Harvard, MIT, the Stanford Graduate School of Engineering, the Library of Congress, Department of Defense, IBM corporation and many others. His teaching included a graduate course at Lehigh University and courses on the theory of multimedia at San Francisco State University.

In 1990, Mr. Heyer designed and used one of the first non-linear video editors at the Apple World Wide Developer Conference. For the US Department of Energy, he designed and built a system for photographing the inside of a nuclear reactor as it approached meltdown. He established the field of infonomics, the study of how people use and value information. In 1996, Heyer co-founded the Silicon Valley World Internet Center, a showcase, think tank and collaboration laboratory for internet invention.

In 2014, Mr. Heyer renewed his interest in the connection between information and thermodynamics. Working with Professor Adrian Bejan of Duke University, originator of the thermodynamic Constructal Law of physics, he formulated, presented and published a new paper, The Constructal Theory of Infonomics. This study reveals the fundamental information and thermodynamic principles that govern all life forms, including human social life.

In the quest to understand and predict the human impact and uses of advancing information technology, Heyer studied the neurobiology of Dr. Peter Sterling (Principles of Neural Design), whose pioneering work focuses on the information and thermodynamic physics that govern the evolution and function of all nervous systems.

This short article is an introduction to Heyer’s current study. It shows how the neurophysical principles of human vision can be applied to understand art and improve the practice of photography.